From Impossible to Inevitable: How Maestro Built a Production Database Server Through Conversation

Building a Redis-compatible server the traditional way: 6-12 months, 4-6 engineers, $500,000.

Building it with Maestro: 70 hours, 1 engineer working part-time, $10,000.

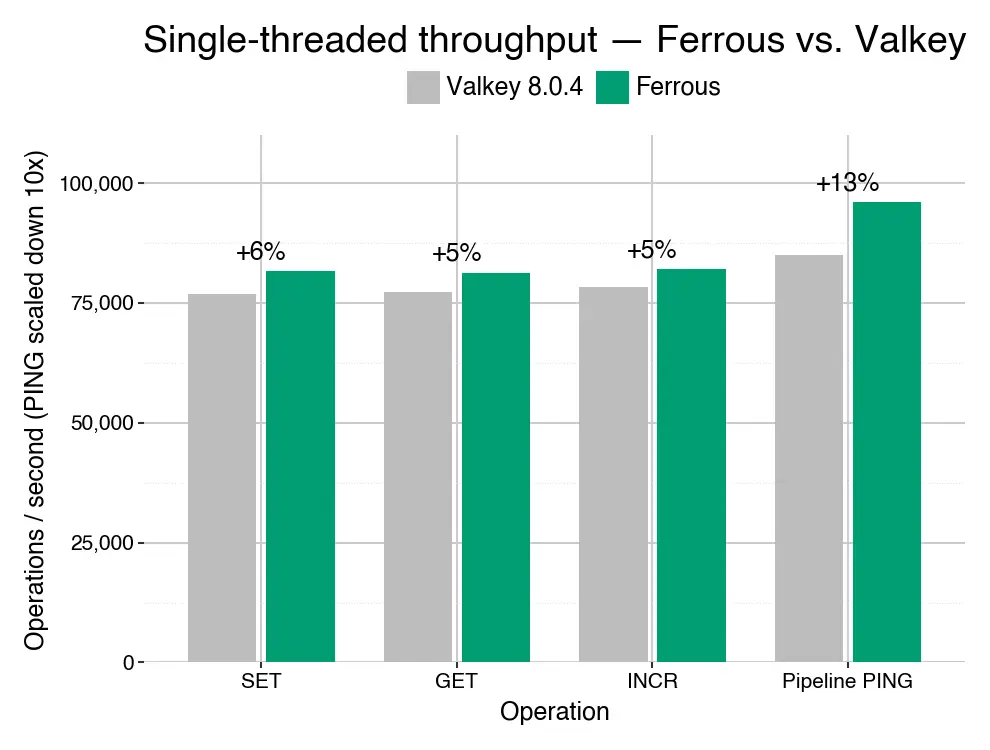

Same result? No. The Maestro version runs faster. SET operations: 6% faster than Valkey. GET operations: 5% faster. Pipeline PING: 13% faster. 35,000 lines of production code, implementing 114 Redis commands, all written by AI through conversation.

You can inspect the code here.

This isn’t about making developers more productive. It’s about redefining what they can pull off. Here’s what happened when we decided to test that theory.

The Ferrous Experiment

We needed a real-world challenge to test what the Maestro system is capable of. Could our AI agent build a production database server? Not a toy with a few features, but a complete Redis-compatible system that handles real workloads.

The result was Ferrous: around 35,000 lines of code, of which 20,000 lines are Rust, implementing 114 Redis commands and achieving over 80,000 operations per second. It consistently outperforms Valkey by 5-13% on core operations, and every single line was written by Maestro, iGent’s AI agent. The human engineer provided only architectural direction through conversation and never wrote any implementation code.

How Ferrous Actually Developed

To see how Maestro differs from systems aimed at building small-scale applications or demos, let’s walk through the development of Ferrous.

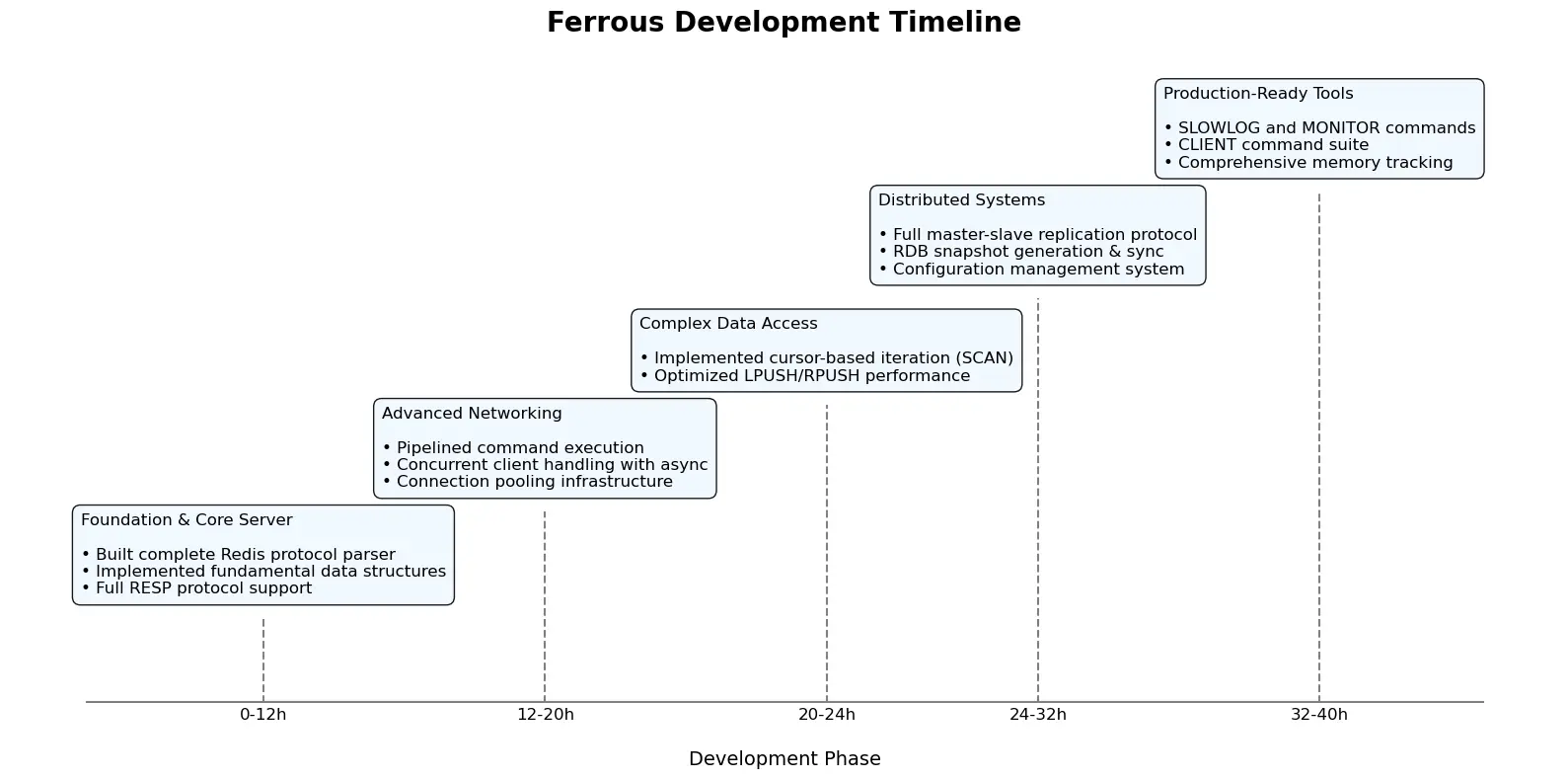

First, here is a graphical overview of the first 40 hours of development:

Here are more detailed examples of what took place at various points.

Starting Strong

From a single directive to build a Redis-compatible server, Maestro produced:

- Non-blocking TCP server with connection pooling

- Complete RESP protocol parser

- Core commands (GET, SET, DEL, EXISTS)

- Key-value storage with expiration

- Sorted sets on skip lists

- RDB persistence

- Pub/Sub messaging

This one-shot, initial version achieved 60% of Redis’ performance. In many cases, this might be enough, especially if it took a few weeks of engineering effort. For Maestro, this is just the starting point: a well-designed architecture that can be extended and optimised.
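To make the "RESP protocol parser" item concrete, here is a minimal sketch of parsing one client command in the RESP wire format (an array of bulk strings). This is not the Ferrous parser: the function names `parse_command` and `read_prefixed_len` are hypothetical, and the sketch treats incomplete and malformed input the same way, which a production parser would not.

```rust
/// Parse one RESP command of the form `*N\r\n$len\r\narg\r\n...`.
/// Returns the arguments and the number of bytes consumed, or `None` if the
/// buffer is incomplete or malformed (hypothetical helper, not Ferrous code).
pub fn parse_command(buf: &[u8]) -> Option<(Vec<Vec<u8>>, usize)> {
    let mut pos = 0;
    let count = read_prefixed_len(buf, &mut pos, b'*')?;
    let mut args = Vec::new();
    for _ in 0..count {
        let len = read_prefixed_len(buf, &mut pos, b'$')?;
        let end = pos.checked_add(len)?;
        let term = end.checked_add(2)?;
        // The payload must be followed by a CRLF terminator.
        if buf.len() < term || &buf[end..term] != b"\r\n" {
            return None;
        }
        args.push(buf[pos..end].to_vec());
        pos = term;
    }
    Some((args, pos))
}

/// Read `<prefix><decimal>\r\n` starting at `*pos` and advance `*pos` past it.
fn read_prefixed_len(buf: &[u8], pos: &mut usize, prefix: u8) -> Option<usize> {
    if buf.get(*pos) != Some(&prefix) {
        return None;
    }
    let start = *pos + 1;
    // Find the CRLF that ends the length line.
    let rel = buf.get(start..)?.windows(2).position(|w| w == b"\r\n")?;
    let digits = std::str::from_utf8(&buf[start..start + rel]).ok()?;
    let n: usize = digits.parse().ok()?;
    *pos = start + rel + 2;
    Some(n)
}
```

Returning the number of bytes consumed lets a caller keep any remaining bytes in its read buffer and parse the next command from there.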

Learning Through Performance

By PR #3, Maestro identified that sequential command processing was bottlenecking throughput. Without being told to implement pipelining, it recognized the pattern and implemented batched execution. Throughput jumped from 60,000 to 250,000 operations per second.
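The post does not show how Ferrous batches pipelined commands, so the following is only a sketch of the general idea: execute every command that has already been parsed from the socket, collect all replies in one buffer, and write that buffer once. The function `execute_batch`, the in-memory `HashMap` store, and the three supported commands are assumptions made for illustration.

```rust
use std::collections::HashMap;

/// Execute every already-parsed command in `batch` and append all replies to
/// one output buffer, so the caller can answer the whole pipeline with a
/// single socket write instead of one write per command.
/// (Hypothetical sketch; not the Ferrous implementation.)
pub fn execute_batch(
    store: &mut HashMap<Vec<u8>, Vec<u8>>,
    batch: &[Vec<Vec<u8>>],
) -> Vec<u8> {
    let mut out = Vec::new();
    for cmd in batch {
        // Command names are case-insensitive in Redis.
        let name = cmd.first().map(|n| n.to_ascii_uppercase());
        match (name.as_deref(), cmd.len()) {
            (Some(b"PING"), 1) => out.extend_from_slice(b"+PONG\r\n"),
            (Some(b"SET"), 3) => {
                store.insert(cmd[1].clone(), cmd[2].clone());
                out.extend_from_slice(b"+OK\r\n");
            }
            (Some(b"GET"), 2) => match store.get(&cmd[1]) {
                Some(value) => {
                    // Bulk string reply: `$<len>\r\n<bytes>\r\n`.
                    out.extend_from_slice(format!("${}\r\n", value.len()).as_bytes());
                    out.extend_from_slice(value);
                    out.extend_from_slice(b"\r\n");
                }
                // Null bulk string for a missing key.
                None => out.extend_from_slice(b"$-1\r\n"),
            },
            _ => out.extend_from_slice(b"-ERR unknown command or wrong arity\r\n"),
        }
    }
    out
}
```

The gain comes from amortizing per-command overhead (system calls, wake-ups) across the batch; the commands themselves still run in order.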

PR #5 found a worse problem. List operations were crawling at 457 ops/sec for LPUSH and 131 for RPUSH. The AI diagnosed inefficient memory allocation patterns. It didn’t patch the problem. It reorganized the entire data structure. Result: 81,000 ops/sec for LPUSH, 75,000 for RPUSH. Those are improvements of roughly 178x and 580x.
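The post does not say which data structure Ferrous ended up with for lists. As an illustration of the kind of change that removes allocation and shifting costs from both ends of a list, here is a sketch built on the standard library's `VecDeque`, a ring buffer with amortized O(1) pushes at the head and the tail. The type `ListValue` and its methods are hypothetical.

```rust
use std::collections::VecDeque;

/// Hypothetical list value. A ring buffer gives amortized O(1) pushes and
/// pops at both ends, unlike `Vec::insert(0, ..)`, which shifts every
/// existing element on each head insertion.
#[derive(Default)]
pub struct ListValue {
    items: VecDeque<Vec<u8>>,
}

impl ListValue {
    /// LPUSH semantics: push at the head and return the new length.
    pub fn lpush(&mut self, value: Vec<u8>) -> usize {
        self.items.push_front(value);
        self.items.len()
    }

    /// RPUSH semantics: push at the tail and return the new length.
    pub fn rpush(&mut self, value: Vec<u8>) -> usize {
        self.items.push_back(value);
        self.items.len()
    }

    /// LPOP semantics: remove and return the head element, if any.
    pub fn lpop(&mut self) -> Option<Vec<u8>> {
        self.items.pop_front()
    }
}
```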

Here, the throughput numbers are the north star. Maestro verifies them directly: it runs the benchmarks, sees the results, and reflects. They serve as a powerful, verifiable signal for progress, much more effective than any user steering.
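The post does not name the benchmarking tool Maestro used. The measurement itself is simple, though, and a minimal sketch shows what an "operations per second" figure is: a count divided by elapsed wall-clock time. The helper `ops_per_sec` is hypothetical and measures an in-process closure, not a networked server.

```rust
use std::hint::black_box;
use std::time::Instant;

/// Run `op` `iterations` times and return the observed operations per second.
/// (Hypothetical micro-benchmark helper; a real server benchmark would also
/// include network round trips and concurrent clients.)
pub fn ops_per_sec<T>(iterations: u64, mut op: impl FnMut() -> T) -> f64 {
    let start = Instant::now();
    for _ in 0..iterations {
        // black_box keeps the optimizer from discarding the result.
        black_box(op());
    }
    // Guard against a zero reading on very coarse clocks.
    let secs = start.elapsed().as_secs_f64().max(f64::MIN_POSITIVE);
    iterations as f64 / secs
}
```

Comparing the value before and after a change gives the kind of verifiable signal described above.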

Making Hard Choices

Instead of having one big lock for the entire key-value store, Maestro divided the store into independent shards, each with its own lock. This allows multiple threads to operate on different keys simultaneously without blocking each other. Maestro picked 16 shards as a compromise between concurrency and overhead. It used deterministic hashing for consistent key routing. These are real engineering decisions.
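Before the routing function from Ferrous, here is a minimal sketch of how per-shard locking can fit together. It is not the Ferrous store: the type `ShardedStore`, the choice of `RwLock` over `Mutex`, and the `HashMap` inside each shard are assumptions. Only the shard count of 16 and the FNV-1a hash come from the post.

```rust
use std::collections::HashMap;
use std::sync::RwLock;

const SHARDS: usize = 16; // the shard count cited in the post

/// Hypothetical sharded key-value store: one lock per shard, not one per store.
pub struct ShardedStore {
    shards: Vec<RwLock<HashMap<Vec<u8>, Vec<u8>>>>,
}

impl ShardedStore {
    pub fn new() -> Self {
        Self {
            shards: (0..SHARDS).map(|_| RwLock::new(HashMap::new())).collect(),
        }
    }

    /// FNV-1a over the key bytes, reduced to a shard index. The same key
    /// always maps to the same shard.
    pub fn shard_index(key: &[u8]) -> usize {
        let mut hash: u64 = 0xcbf29ce484222325;
        for &byte in key {
            hash ^= byte as u64;
            hash = hash.wrapping_mul(0x100000001b3);
        }
        (hash % SHARDS as u64) as usize
    }

    pub fn set(&self, key: &[u8], value: Vec<u8>) {
        // Only the owning shard is write-locked; the other shards stay available.
        let mut shard = self.shards[Self::shard_index(key)].write().unwrap();
        shard.insert(key.to_vec(), value);
    }

    pub fn get(&self, key: &[u8]) -> Option<Vec<u8>> {
        // Readers of the same shard can proceed concurrently under a read lock.
        let shard = self.shards[Self::shard_index(key)].read().unwrap();
        shard.get(key).cloned()
    }
}
```

Because `set` and `get` take `&self`, a `ShardedStore` wrapped in an `Arc` can be shared across threads, and two threads block each other only when their keys land in the same shard.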

```rust
const SHARDS_PER_DATABASE: usize = 16;

fn get_shard_index(&self, key: &[u8]) -> usize {
    // FNV-1a hash function for speed and distribution
    const FNV_OFFSET: u64 = 0xcbf29ce484222325;
    const FNV_PRIME: u64 = 0x100000001b3;

    let mut hash = FNV_OFFSET;
    for &byte in key {
        hash ^= byte as u64;
        hash = hash.wrapping_mul(FNV_PRIME);
    }
    (hash % SHARDS_PER_DATABASE as u64) as usize
}
```

The Lua Detour

Between PR #10 and PR #27, Maestro implemented Lua scripting support for Ferrous by building a complete Lua virtual machine in Rust, including a bytecode interpreter, garbage collection, table implementation, and coroutine support.

While the VM was making steady progress, the implementation was becoming convoluted, because a Lua VM is a poor match for safe Rust. Since Maestro’s main goal was to complete the Redis-compatible database, it decided to integrate the proven MLua library instead. In the real world, a team might carry on with the VM because of the sunk cost fallacy. With Maestro, other than the cost of the tokens, nothing is lost. Coding with a highly capable agent like Maestro means easier micro-pivots and generally being lighter on your feet, less attached to existing solutions.

The Art of Direction

If Maestro is highly agentic, and highly capable of generating code, where does the human fit in? Their role is shifted upward: no debugging, no helper functions, no glue code or copying and pasting. They provide strategic insight: “Focus on production readiness over features”, “Ensure Redis protocol compliance”, “Optimize for concurrent access”, “Maintain backward compatibility”.

For Ferrous, these directives shaped thousands of decisions Maestro made during its implementation.

As we were building, a consistent conversation pattern emerged:

- Human identifies goal: “We need replication”

- AI explores solutions: Researches strategies, understands trade-offs

- AI implements: Master-slave replication with PSYNC, RDB sync, failover

- Human validates: “Replication works but performance dropped”

- AI optimizes: Finds synchronous bottleneck, implements async propagation

We’ve found that leaning into this structure produced the best results. Of course, we’re still uncertain how this collaboration will ultimately end up looking, and are actively exploring various ways of working with Maestro.
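To make the last step of that loop concrete, here is a sketch of what moving from synchronous to asynchronous propagation can look like: the write path enqueues the command and returns, and a background thread drains the queue toward the replica. This is not the Ferrous replication code. The type `Propagator` is hypothetical, and the "replica" is a stand-in that just collects what it would have been sent.

```rust
use std::sync::mpsc::{channel, Sender};
use std::thread::{self, JoinHandle};

/// Hypothetical propagation handle. The write path enqueues and returns
/// immediately; a background thread drains the queue toward the replica.
pub struct Propagator {
    tx: Sender<Vec<u8>>,
    worker: JoinHandle<Vec<Vec<u8>>>,
}

impl Propagator {
    pub fn start() -> Self {
        let (tx, rx) = channel::<Vec<u8>>();
        // Stand-in for a replica connection: collect what would be sent.
        // The iterator ends once every sender has been dropped.
        let worker = thread::spawn(move || rx.into_iter().collect::<Vec<Vec<u8>>>());
        Self { tx, worker }
    }

    /// Called on the write path. The channel is unbounded, so this never
    /// waits for the replica.
    pub fn propagate(&self, command: Vec<u8>) {
        // A send error only means the worker has exited; ignored in this sketch.
        let _ = self.tx.send(command);
    }

    /// Close the queue and return everything the "replica" received, in order.
    pub fn shutdown(self) -> Vec<Vec<u8>> {
        drop(self.tx);
        self.worker.join().unwrap()
    }
}
```

The trade-off is the usual one for asynchronous replication: clients no longer wait on the replica, but the replica can briefly lag behind the primary.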

Performance Proves the Point

Beyond Just Working

Building a working system is one thing. Building one that beats established alternatives is another. While building software, Maestro can take the initiative to benchmark and optimize its performance. It measured Ferrous’ performance extensively and compared it against Valkey; the key metrics are graphed below, and the full results are at this link.

These are consistent, reproducible advantages achieved through AI optimization.

The Stream Transformation

The most dramatic improvement came in Streams. Maestro’s initial implementation clocked in at 500 ops/sec. Then, it found a double-locking pattern causing cache problems:

```rust
// Before: nested locks
pub struct Stream {
    inner: Arc<RwLock<StreamInner>>,
}

// After: cache-coherent design
pub struct Stream {
    data: Mutex<StreamData>, // Single lock
    length: AtomicUsize, // Lock-free reads
    last_id_millis: AtomicU64,
}
```

Here, a relatively small change has a large ROI because the understanding of the underlying architecture is there. Reads are separated from writes, no more double-locking, atomics for metadata. That gets us to 30,000 ops/sec, a 60x improvement.
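A minimal sketch of how the "after" layout can be used, assuming the field names from the excerpt. The `Vec` of `(millis, payload)` pairs stands in for `StreamData`, whose real definition the post does not show, and the methods `append`, `len`, and `last_id_millis` are hypothetical.

```rust
use std::sync::atomic::{AtomicU64, AtomicUsize, Ordering};
use std::sync::Mutex;

/// Entries are (millisecond timestamp, payload), a simplification of real
/// stream IDs and of the `StreamData` type, which the post does not show.
#[derive(Default)]
pub struct Stream {
    data: Mutex<Vec<(u64, Vec<u8>)>>, // single lock guards the entries
    length: AtomicUsize,              // mirrored metadata, readable without the lock
    last_id_millis: AtomicU64,
}

impl Stream {
    /// Append an entry under the single lock and publish the new metadata.
    pub fn append(&self, millis: u64, payload: Vec<u8>) {
        let mut data = self.data.lock().unwrap();
        data.push((millis, payload));
        // Update the atomics while still holding the lock, so writers
        // publish metadata in the same order they modify the entries.
        self.length.store(data.len(), Ordering::Release);
        self.last_id_millis.store(millis, Ordering::Release);
    }

    /// Length query that never touches the mutex.
    pub fn len(&self) -> usize {
        self.length.load(Ordering::Acquire)
    }

    /// Timestamp of the most recent entry, also read without locking.
    pub fn last_id_millis(&self) -> u64 {
        self.last_id_millis.load(Ordering::Acquire)
    }
}
```

Metadata queries become plain atomic loads, so they no longer contend with writers for the lock.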

What This Means

Traditional software development follows a familiar pattern. Requirements. Design documents. Implementation sprints. Code reviews. Debugging. Optimization. This process takes months or years for complex systems.

The Ferrous approach inverts this. One human provides strategic direction. AI handles all implementation. The bottleneck shifts from coding speed to architectural clarity.

This shift affects organizations differently, depending on the maturity of their codebase and their willingness to adopt new approaches. Resource-constrained startups can now tackle infrastructure projects previously reserved for funded companies. Custom databases, message queues, or API gateways become feasible when implementation costs drop 100x. Large organizations struggling with legacy modernization also have a new path. Instead of multi-year rewrites, they can direct AI to rebuild systems while maintaining compatibility.

Perhaps most importantly, being able to produce software in this way changes how we think about technical roles and how we distribute their responsibilities. Management evolves from coordinating implementation to maintaining architectural vision. The question changes from “How many engineers?” to “What architecture serves our needs?” For the individual contributor, the Ferrous development process highlights which skills become increasingly valuable:

- System Architecture: Understanding how components interact, where bottlenecks form, and which patterns scale effectively

- Performance Literacy: Recognizing cache coherency issues, lock contention, and optimization opportunities

- Strategic Thinking: Knowing when to optimize versus when to refactor, when to build versus when to integrate

- Quality Standards: Insisting on comprehensive testing, benchmarking, and validation

- Clear Communication: Articulating technical requirements and architectural vision unambiguously

Notably absent from this list are syntax memorization, framework expertise, and manual step-through debugging techniques. The mechanical aspects of programming that consume most junior and mid-level engineering time become largely irrelevant.

Check Our Claims

Ferrous is open source and available here. Anyone can clone the repository, run the benchmarks, examine the code, and verify these claims independently. This transparency is intentional because extraordinary claims require extraordinary evidence.

But Ferrous isn’t a product. It’s validation of a different approach to software. When a single engineer directs AI to build systems that beat established alternatives, when 70 hours of conversation replaces 10,000 hours of implementation, when architectural vision becomes more valuable than coding ability, the industry has changed.

The toy demos were merely practice exercises while the technology matured. Now, with long-context models and proper scaffolding, AI can build real production systems that perform at the highest levels.

Every engineering organization now faces a critical strategic choice. The question isn’t whether to adopt AI tools but whether they understand the fundamental difference between AI that helps write code and AI that builds complete systems. The distinction between tools that accelerate current processes and tools that replace them entirely has become the key strategic consideration.

The era of toy demos is over. The era of real systems has begun. Sign up here to get access to Maestro and try it for yourself.